Systems Engineering (SE) practitioners and researchers have claimed many benefits of applying SE, including improved product definition and development and enhanced business performance. It is no secret that applying an SE process to development can mean a slightly longer problem definition phase, more rigorous verification and validation, and an overall increase in the resources allocated to certain areas. Is the investment really worth it? This paper highlights two pertinent studies on the effectiveness of systems engineering in pursuit of the answer.

Study 1: The Case for SE in New Product Development

In 2006, the materials science experts, Corning, and the esteemed university, Cornell, embarked on a research effort to uncover how SE is used and how effective it is. This study emerged as a result of the growing need for more substantial research on the effectiveness of Systems Engineering in the New Product Development (NPD) environment. Vanek et al. (2017) addressed the gap in research by testing the impact of SE across multiple projects within Corning Incorporated. A team from the Systems Engineering Directorate at Cornell University conducted interviews with 19 systems engineers (SEs) and project managers (PMs) between April 2008 and March 2009. These interviews evaluated the depth to which the SEs and PMs used systems engineering, the techniques used and the effectiveness of those techniques in application. The study tested for application and effectiveness in the areas of market analysis, requirements engineering, validation/verification and trade studies. Corning works on a range of products with the hope of having a breakthrough with one of its outputs, thereby leading to significant revenue streams (Corning Systems Engineering Directorate, 2009).

Previous studies of the effectiveness of SE in the NPD area did not assess effectiveness over a range of projects, nor did they look at the performance of projects longitudinally, i.e. over a substantial period of time. The study was conducted as follows: Corning selected the NPD projects for study and Cornell conducted impartial research through a three-phase process of preparation, interview and post-processing (Vanek, et al., 2009). The following research-related precautions were taken during the study[1]:

- The study focused on observable practices, not knowledge of SE terminology, i.e. it tested for the techniques themselves rather than for the names assigned to them or professed knowledge of applying them.

- Questions were asked independently to avoid interviewees skewing results based on opinion or desire to promote or discourage a particular technique.

- The Corning SE group took care not to form an opinion about the performance of a project that might influence the manner in which questions were asked in interviews, and interviewers were instructed to conduct interviews for information gathering only, i.e. interviewers were not to pass judgement on whether the project was successful.

[1]A description of the study is found in Vanek, F., Jackson, P., Grzybowski, R. and Whiting, M. (2017) Effectiveness of Systems Engineering Techniques on New Product Development: Results from Interview Research at Corning Incorporated. Modern Economy, 8, 141-160. https://doi.org/10.4236/me.2017.82009

Areas of SE content were chosen from Honour and Valerdi’s (2006) ontology of SE. These areas are well reflected in the main SE standards, including ANSI-EIA/632, IEEE-1220, ISO-15288, CMMI and MIL-STD-499C, and cover the content indicated in Table 1.

Table 1: SE Category and Related Content Guiding Questions in the Interviews

| SE Category | Typical Content |
| --- | --- |
| Market analysis | Define customer; define customer needs |
| Requirements engineering | Requirements development, based on customer input; requirements tracking |
| Technical analysis | Functional analysis; tradeoff analysis |
| Verification & validation | System verification & validation; design for testability |

For each of these areas, interview questions were crafted to detect the presence or absence of the content identified within the NPD process (a total of 14 questions were asked) including:

- “What is the most recent version of the value proposition for the product that you have presented, for example at the last progress or stage gate review?”

- “What evidence can you present of market analysis, including total market size, market segmentation (by geography or customer type), target share, and/or market testing?”

Interviewees were then asked to provide supporting evidence such as figures, survey responses from customers and comparisons with other products. Based on the evidence provided, a score from 0 to 1 was assigned and tallied for each area. Assessment of project performance involved evaluating internal project performance in two parts: verification of internal measures and an assessment of the ability of the project to meet the expectations of the stakeholders. Projects were deemed satisfactory, superior or falling short based on these measures. In the case of NPD, a satisfactory or superior project would necessarily have been brought to market, as projects that do not perform well are removed from resource allocations earlier in the development process. Short-falling projects could be ones that made it to market but did not realize commercial success.
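The tallying step described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the function name `tally_se_scores`, the category labels and the example scores are hypothetical and are not taken from the study. The only details drawn from the text are that each piece of evidence receives a score between 0 and 1 and that scores are tallied per area.

```python
from collections import defaultdict


def tally_se_scores(scored_answers):
    """Tally evidence scores per SE category and overall.

    scored_answers: list of (category, score) pairs, one per interview
    question, where each score lies between 0 and 1.
    Returns (points per category, percent of total possible points).
    """
    if not scored_answers:
        raise ValueError("at least one scored answer is required")
    per_category = defaultdict(float)
    for category, score in scored_answers:
        if not 0.0 <= score <= 1.0:
            raise ValueError(f"score out of range for {category}: {score}")
        per_category[category] += score
    # Assumption: every question is worth at most one point.
    total_possible = len(scored_answers)
    percent = 100.0 * sum(per_category.values()) / total_possible
    return dict(per_category), percent


# Hypothetical scores for one project (not data from the study).
example = [
    ("Market", 1.0),
    ("Market", 0.5),
    ("Requ", 0.5),
    ("Verif", 0.0),
]
points, percent = tally_se_scores(example)
print(points, percent)
```

Expressing the total as a percentage of possible points makes projects comparable even if the number of applicable questions differs between them.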

The interviews

Interviewees spanned a range of experience from less than 10 years to more than 30 years. The projects were selected based on the desire to study a range of projects that would provide breadth of data. Selection was also impacted by availability of project leaders to be interviewed. The 19 projects were representative of the five major market areas in which Corning actively works.

The results

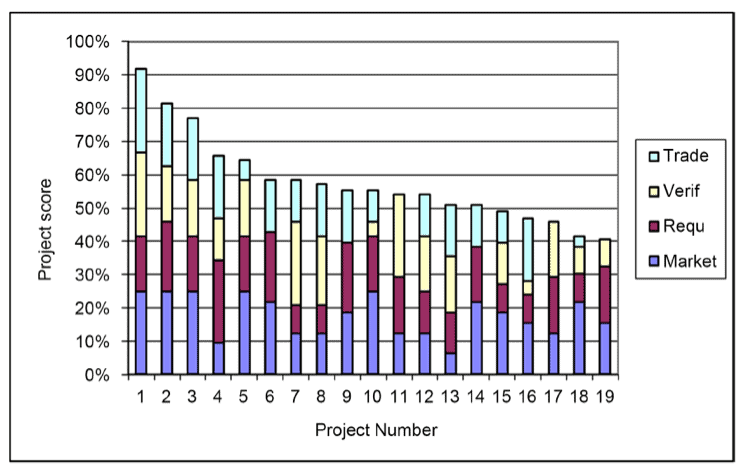

The processed results of the interviews showed that the projects ranged in amount of SE use from 41% to 92%. A description of the results follows.

Figure 1: Comparison of percent of total possible points earned by numbered project, including contribution from SE category (Vanek, et al., 2017)

Notes for the figure: ‘Trade’ = technical analysis, ‘Verif’ = verification & validation, ‘Requ’ = requirements engineering, ‘Market’ = market analysis.

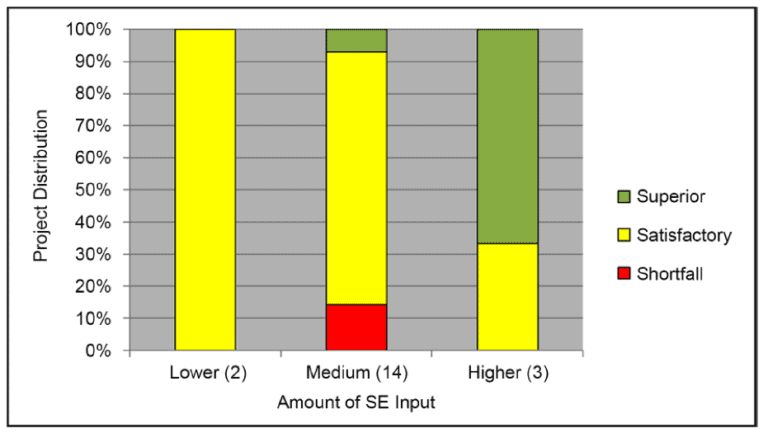

The 19 projects were divided into lower, medium and higher levels of SE application, using thresholds one standard deviation below and above the mean, respectively. The mean was 59% application, the ‘lower’ threshold was 46% and the ‘higher’ threshold was 72%. Two projects had low SE input, three had high SE input and 14 had medium SE input.
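The banding rule above amounts to simple threshold arithmetic, sketched below. The function name `classify_se_input` is hypothetical. The standard deviation of 13 percentage points is inferred from the reported thresholds (59 - 46 = 13 and 72 - 59 = 13) rather than stated in the source, and treating a value exactly on a threshold as ‘medium’ is an assumption.

```python
def classify_se_input(percent, mean=59.0, std_dev=13.0):
    """Place a project's SE usage (in percent) into a band.

    mean is the reported mean application level; std_dev is inferred
    from the reported thresholds of 46% and 72%.
    """
    lower_threshold = mean - std_dev   # 46.0 with the defaults
    higher_threshold = mean + std_dev  # 72.0 with the defaults
    if percent < lower_threshold:
        return "lower"
    if percent > higher_threshold:
        return "higher"
    # Assumption: values on a threshold count as medium.
    return "medium"


# The reported extremes of SE use (41% and 92%) and the mean (59%).
for usage in (41.0, 59.0, 92.0):
    print(usage, classify_se_input(usage))
```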

Project performance was evaluated just after the interview or as soon as results became available. Of the 19 projects studied, 14 had satisfactory performance, 2 had shortfalls and 3 were superior. The findings supported the notion that superior projects generally had high SE input and that projects that fell short in some areas typically had shortcomings in the level and quality of SE application (Vanek, et al., 2017). The graph illustrates that superior projects occur only for medium and high levels of SE and that the share of superior projects increases substantially with increasing levels of SE input. The study concluded that schedule and budget adherence are not definitive indicators of project success, since a budget increase or schedule extension may, for example, indicate rising interest. Other evidence, such as high profitability and rapid growth in customer interest, was therefore used to indicate project performance, which made the measure a qualitative evaluation.

Figure 2: Project performance as a function of overall SE input, for lower, medium, and higher SE input projects. Note: the number in parentheses shows how many projects fell into the category bar, e.g., 2 projects in the ‘lower SE input’ category, etc. (Vanek, et al., 2017)

Some takeaways from the research (Vanek, et al., 2017):

- SE leads to project excellence some but not all of the time. In NPD, project success is affected by factors other than SE input.

- The short-falling projects did not have poor SE input in all four studied areas but rather showed critical failures in developing verification and validation requirements and in execution.

- Failure to conduct a thorough market analysis and provide a strong value proposition often led to shortfalls.

- Keeping projects going beyond the point where results started to look unpromising also resulted in poorer performance.

- Technical analysis is valued in the organization, but market analysis, requirements and V & V were more influential.

Some additional takeaways emerged from the interviews (Vanek, et al., 2017):

- Applying SE later in a project can be better than not at all.

- To get buy-in from the organization, a value proposition for SE must be advanced.

- One of the challenges in implementing SE is that there is often a need to conduct market analysis during product development (rather than before), as sometimes waiting for the market research results can mean starting development when it is already too late, from a competitive perspective.

- Another challenge is that resources are not always allocated to SE activities if stakeholders do not see them as a priority.

- Planning and scheduling for a new product is difficult, as sometimes one does not even know what one is going to test; sometimes there genuinely is not enough known to apply design for testability.

Overall the study showed that there is evidence to support the notion that SE application improves project performance within the NPD environment.

Study 2: The Business Case for SE

One of the more popular studies on the effectiveness of systems engineering was conducted in 2012 as a collaboration between the National Defense Industrial Association (NDIA) Systems Engineering Effectiveness Committee (SEEC), the Software Engineering Institute (SEI) of Carnegie Mellon University and the Institute of Electrical and Electronics Engineers Aerospace and Electronic Systems Society (IEEE-AESS). The study emerged after SE managers were noted to have made overly optimistic assumptions about system requirements, technology and design maturity (Elm & Goldenson, 2013). This tendency to be overly optimistic about the quality of SE being conducted within projects was associated with a lack of disciplined systems engineering analysis prior to initiating development of the system. In this study, a survey was conducted of several projects and programs executed by system developers reached through the National Defense Industrial Association Systems Engineering Division (NDIA-SED), IEEE-AESS and the International Council on Systems Engineering (INCOSE) (Elm & Goldenson, 2012). The objective of the survey was to identify SE best practices, collect data about those practices and then search for relationships between SE practices and project performance.

The survey

The SEEC surveyed a sample of major government contractors and subcontractors. The artifact-based questionnaire itself was developed using the SE expertise of the SEEC members, and the survey data were collected by Carnegie Mellon® SEI. The survey assessed each project’s Systems Engineering Capability (SEC), measured according to the implementation of SE best practices. Project performance was assessed based on how well the project met cost, schedule and scope expectations. Other factors taken into consideration included Program Challenge and Prior Experience, as some projects are more complex than others and acquirers have different levels of capability (Elm & Goldenson, 2012).

The results

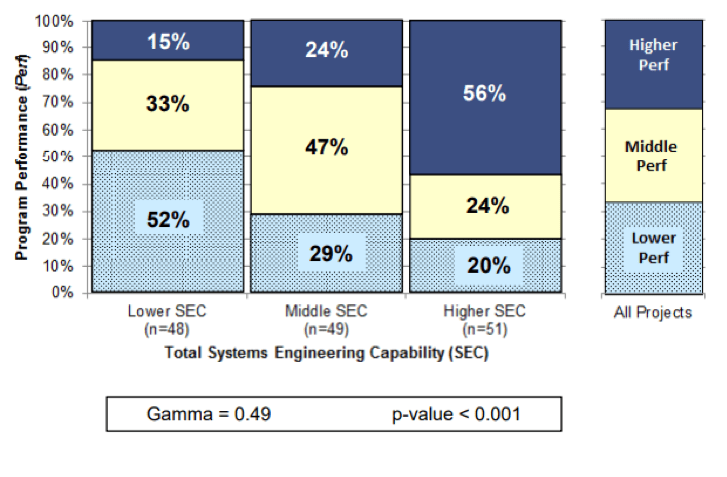

Figure 3: Program Performance vs. Total SE (Elm & Goldenson, 2012)

Across all projects, only 15% of those with lower SEC delivered higher performance, compared with 24% for middle SEC and 57% for higher SEC. A Gamma of 0.49 indicates a very strong relationship between program performance and total SE.
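To make the Gamma statistic more concrete, the sketch below computes an ordinal association measure from a contingency table. It assumes that Gamma here refers to Goodman and Kruskal's gamma, defined as (C - D) / (C + D), where C and D are the numbers of concordant and discordant pairs of observations. The function name and the example counts are hypothetical and are not data from the survey.

```python
def goodman_kruskal_gamma(table):
    """Goodman and Kruskal's gamma for an ordered contingency table.

    table[i][j] is the number of projects at row level i (e.g. SEC)
    and column level j (e.g. performance), both ordered low to high.
    """
    n_rows = len(table)
    n_cols = len(table[0])
    concordant = 0
    discordant = 0
    for i in range(n_rows):
        for j in range(n_cols):
            # Pair each cell with every cell in a strictly higher row.
            for k in range(i + 1, n_rows):
                for m in range(n_cols):
                    pairs = table[i][j] * table[k][m]
                    if m > j:
                        concordant += pairs   # both variables increase
                    elif m < j:
                        discordant += pairs   # one up, the other down
    if concordant + discordant == 0:
        raise ValueError("gamma is undefined: no untied pairs")
    return (concordant - discordant) / (concordant + discordant)


# Hypothetical counts: rows = lower/middle/higher SEC,
# columns = lower/middle/higher performance (not survey data).
illustrative_counts = [
    [10, 6, 3],
    [7, 8, 5],
    [3, 6, 12],
]
print(round(goodman_kruskal_gamma(illustrative_counts), 2))
```

Gamma ranges from -1 (higher SEC always paired with lower performance) through 0 (no association) to +1 (higher SEC always paired with higher performance), and pairs tied on either variable are ignored.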

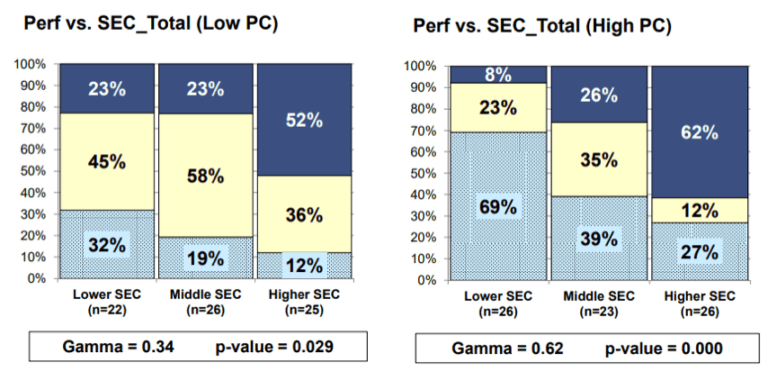

Figure 4: Side-by-side comparison of Performance versus SEC for Low and High levels of Program Challenge

Comparing program performance versus SEC for lower-challenge and higher-challenge programs shows a strong relationship (gamma = 0.34) between total SE and program performance for lower-challenge programs and a very strong relationship (gamma = 0.62) for higher-challenge programs.

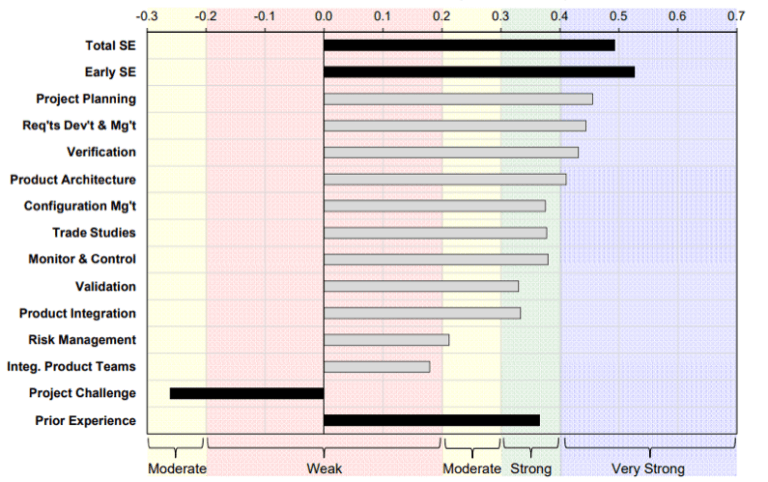

Finally, the study investigated the relationship between project performance and individual SE capabilities. The graph captures the strength of the relationship between conducting each SE activity and program performance. For most SE activities there is a moderate to very strong correlation between their implementation and program performance.

Figure 5: Performance vs. SE Capability – All Projects

Conclusion

Studies have shown that there is a strong business case for implementing SE, particularly in higher complexity projects. The sophisticated studies presented in this paper deliver a resounding message regarding implementing SE: SE, when applied well, increases the probability of superior project performance particularly for more challenging projects. There has been substantial research into the effectiveness of systems engineering. With a rising need to address complexity in product and organizational contexts, it is inevitable that further research on SE effectiveness will take place. This article highlights the impact of SE on program and project performance for different levels of project complexity as well as for new product developments.

References

Corning Systems Engineering Directorate (2009) Corning-Cornell Project to Evaluate Effectiveness of Systems Engineering Techniques: Final Report from a Literature Review and Interview Research. Corning Incorporated, Corning.

http://www.lightlink.com/francis/CorningReport2009.pdf

Elm, J. P., & Goldenson, D. (2013, May 14). Quantifying the Effectiveness of Systems Engineering. Retrieved from NDIA Storage: https://ndiastorage.blob.core.usgovcloudapi.net/ndia/2013/system/W131023_ELM.pdf

Elm, J., & Goldenson, D. (2012). The Business Case for Systems Engineering Study: Results of the Systems Engineering Effectiveness Survey. Software Engineering Institute, AESS. NDIA. Retrieved from http://ieee-aess.org/sites/ieee-aess.org/files/documents/SE%20Effectiveness%20Study-Nov2012-12sr009.pdf

Honour, E. and Valerdi, R. (2006) Advancing an Ontology for Systems Engineering to Allow Consistent Measurement. Proceedings of Conference on Systems Engineering Research, Los Angeles, 6-9 April 2006, 1-12.

Vanek, F., Jackson, P., Grzybowski, R. and Whiting, M. (2017) Effectiveness of Systems Engineering Techniques on New Product Development: Results from Interview Research at Corning Incorporated. Modern Economy, 8, 141-160.

https://doi.org/10.4236/me.2017.82009

Vanek, F., Grzybowski, R. and Jackson, P. (2009) Quantifying the Benefits of Systems Engineering in a Commercial Product Setting. Insight, 12, 37-38. https://doi.org/10.1002/inst.200912137